An Interview with Scott Alexander

Disambiguating AI, brain emulations, Scott’s writing schedule, figuring out life with newborn twins, and a whole lot more.

Scott Alexander is a practicing psychiatrist at Lorien Psychiatry, and the creator of Slate Star Codex and its successor blog, Astral Codex Ten.

His blogs are a fluid-like substance that makes the entire solid world porous; they rush into every noetic nook and crevice and cranny and leave no idea untouched and unpolished by their movement. Or they’re like a pair of borrowed eyes from a friendly tetrachromat, a person with an extra color-perceiving cone in the retina. Before, you only saw a few shades here and there, but you always managed to be a person of great taste and aesthetic sensibility and knowledge, and you’ve always appreciated your own vision. But suddenly, now, you see what’s right above you, you’re in a world of novel incandescence, new light, you’ve hit peak blogosphere, you’re truly online now.

This is what the internet was always meant to be. A resource for learning. It’s so simple, but we forget! Scott Alexander reminds us with his writing, which covers everything from artificial intelligence to abolishing the FDA to fixing science to his presidential platform, and it’s something that hundreds of thousands of people (probably way, way more) are thankful for. If you’re one of those hundreds of thousands of people you’ll probably like the conversation that follows. And if you’re new to the party I’m very happy you’re here and interested to know what you think.

If you enjoy this conversation consider subscribing for future interviews.

Sotonye: On disambiguating AI

I haven’t seen anyone clearly detail a way to think about what current AIs actually are on a level of category beyond comparison with other current AIs or hypothetical future AIs of vaguely similar types.

The closest thing I’ve seen to some kind of categorization is early GPT being compared to a kind of Google that gave answers in natural language, and this helped disambiguate the whole matter a bit for a little while. Until a new, bigger, badder version of the language model was announced. Nearly every announcement of a new version of a large language model is followed by an added layer of ambiguity over what AI actually is, even though in every case the AI is still just a language model.

People (myself included) freak out in the same way they freak out when the moon reaches its perigee or takes on a deeper shade of red during a lunar eclipse, even though it’s still just the moon. It’s sort of surprising no one has outlined what type of thing LLMs are even though we have a pretty good grasp of their ethology.

My question is: what kind of things are they? What is the best way to think about their categorization? New digital technologies seem to get categorized in two main ways. One is through their impact on, or relationship with, human cognition: we rarely separate Twitter from the idea that it’s an insanity/rage/political-bifurcation mill, for example. The other is through their utility in consumer markets: smartphones now sit pretty snugly between the entertainment and shopping-system categories, and the tech investment ecosystem there seems to track with this idea. But what is the best way to categorize LLMs? What categories can we actually put them in to dispel some of their “otherness”?

Scott:

A big blob of undifferentiated cerebral cortex, ripped away from all of the brain's specific structures and stuck in a vat.

According to the predictive coding (https://slatestarcodex.com/2017/09/05/book-review-surfing-uncertainty/) model, the brain is trying to do what LLMs do - we predict (https://slatestarcodex.com/2019/02/28/meaningful/) the next piece of sense-data in the same way an LLM predicts the next token. The brain uses a structure a lot like an LLM's - a neural network, although of course this is a vague term and there are lots of specific differences. I think they're basically the same type of thing doing the same kind of work.
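To make "predicting the next token" concrete, here is a minimal sketch under loud assumptions: it uses a bigram count table (the names `train_bigram` and `predict_next` are hypothetical, made up for illustration) rather than a neural network, so it shows only the prediction task itself, not how LLMs or the cortex actually carry it out.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def train_bigram(tokens: List[str]) -> Dict[str, Counter]:
    """Count how often each token is followed by each other token."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts: Dict[str, Counter], token: str) -> Optional[str]:
    """Return the most frequent follower of `token`, or None if never seen as a predecessor."""
    followers = counts.get(token)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


corpus = "the cat sat on the mat and the cat slept".split()
model = train_bigram(corpus)
print(predict_next(model, "the"))  # -> cat
```

Real language models replace the count table with a learned network that generalizes to contexts it has never seen, but the training signal is the same kind of thing: guess what comes next, then compare with what actually came next.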

There's some evidence that the average blob of cortex is generic and doesn't really care what it's doing. Blind people can use what are usually visual areas of the brain for other things. Some people who get strokes that affect their language centers learn to use other parts of the brain for language. If during development some mad scientist attached the ears to the visual cortex and the eyes to the auditory cortex, it probably wouldn't work exactly equally well, but it would probably work a little, because aside from some specific optimizations, each bit of cortex is just a generic blob of cognition which sets itself to predicting whatever input gets fed into it.

(Neuroscientists are probably screaming right now. Sorry, I mean "in the big picture".)

If you ripped a random blob of cortex out of the brain, you would separate it from the hypothalamus and basal ganglia and all the other structures that give it desires and planning ability and goal-directed action. You would separate it from the hippocampus and all the other structures that give it long-term memory and stable selfhood. You'd separate it from the sensory and motor cortices that embody it and connect it to the world. And you would break the circuit that goes through the dorsolateral prefrontal cortex and helps it stay consistent and world-model-y and "awake". You'd just have a sort of diffuse generalized cognitive tool where you'd feed data into one end and get patterns and predictions about the data out of the other.

(If you really want to know what it's like to be a blob of cognition detached from higher brain tissues, fall asleep and dream. AI makes some of the same mistakes as dreaming humans - if you look at the lucid dreaming community, they've been saying forever that the two easiest ways to realize you're in a dream are mangled hands and mangled text. AI also mangles these things, because they require some of the most processing and top-down modulation from higher-level world-models, and that seems to involve either a higher-scale connected network or something special about the dorsolateral prefrontal cortex (https://www.astralcodexten.com/p/somewhat-contra-marcus-on-ai-scaling).)

AI is like one of these detached blobs of brain tissue. This is why I was arguing (https://slatestarcodex.com/2019/02/19/gpt-2-as-step-toward-general-intelligence/) as early as 2019 that the GPTs were a big step towards general intelligence. We call them "language models", but as we've seen, with slight tweaks they can learn to generate images / video / music, play games, and possibly (I'm not really up to date on this research) handle movement for embodied robots. The exact structure of LLMs is optimized for language but the same general class of things is going to be able to do whatever human brain tissue does, because it's overall a similar type of thing. You just need to find a way to plug the right input in and reinforce success (I say "just", but obviously this will take billions of dollars, and work by thousands of people who are much smarter than I am).

This is why I expect AI to become human-level or beyond pretty fast. Making a cerebral cortex at all is the hard part. After that, you just need to scale up, and hack together equivalents for other useful brain structures (I say "just", but see above).

Disclaimer that I'm not an AI expert, and all of this is speculation.

Sotonye:

This is by far the best disambiguation of the matter I’ve seen. AIs are self-contained slices of digital brain regions. This category sounds very much right to me. ChatGPT is like the left fusiform gyrus disconnected from everything else, dedicating all its electric pulses and sparks to translating symbols into meaning, Midjourney is like a lateral prefrontal cortex floating in a server farm, dreaming up vivid new worlds, and so on. This feels right and it also flows well into my next question! I’ve always assumed that whenever we think about an extremely advanced AI we’re thinking of a really high-fidelity brain emulation, and you’ve confirmed for me that this assumption is probably right. We’ll get goal-directed AI when we connect LLMs to large basal ganglia models, and AI will dream when digital prefrontal and posterior parietal cortices are linked with something like diffusion. Full-scale high-fidelity brain emulations are where the terms of category for current AIs seem to take us, and I think this makes the total risk profile of AI a little more clear.

We don’t have to worry about powerful language models like GPT-n; we have to worry about digital people who can think a million times faster than von Neumann. I imagine that when something like this speaks to you, all information flow would basically be from the future. It could beat any market with relative ease, could know thousands of years of future events before breakfast. But could it really go rogue in any way harmful to most people if its core intelligence fits inside general human evolutionary boundaries? This is something I’ve never seen anyone describe. If we modeled an AI on myself, for example, and scaled its reasoning parameters up by an order of magnitude, it would probably still just want to interview you and walk my dog Hazel. Would all the goals worked into the brain by evolution and inheritance make their way into simulated brains, or would scale push them aside? What’s the best way to think about the future risk of full brain emulations?

Scott:

Thanks. You take this a different direction than I do, though. I don't think AI is emulating the brain on purpose. I think a chunk of cortex is just the best metaphor we have for abstract purposeless cognition. Although all of AI owes something to brain imitation, I think mostly the inventors of LLMs weren't doing that on purpose, the inventors of the next advance probably won't be doing that on purpose, and the next advance won't necessarily resemble the brain except insofar as the brain is still the best metaphor we have for cognitive processes.

We have very weakly, partially goal-directed AI already, in the form of RLHF. I don't know how this fits with what I just said about the brain. The brain does a lot of reinforcement learning, but the exact way that LLMs do reinforcement learning seems almost on a different level.

I would separate "goal directed AI" from "agentic AI". ChatGPT is "goal-directed" in that it has a goal of giving you a helpful answer, but it lacks some features of agency like generality and time-binding.

The closest thing I've seen to agentic AI so far is AutoGPT, which is just GPT plus a wrapper that prompts it with things like "Make a list of ways to create a successful business", "Okay, how would you do Step 1 on your list", "Okay, now try doing that thing", and so on. Connect this to some sort of output channel (like a Gmail client that sends an email whenever GPT says the word [SEND]) and you can sort of imagine a hacked-together super-dumb agent. My guess is that we get agent-like AIs from starting with something like this and gradually making it more elegant and integrated, not by deliberately setting out to replicate the basal ganglia (which we only half-understand anyway). I don't expect high-fidelity brain emulations, because we don't understand the brain well enough on any level, it will be decades before we do, and it's much easier to just take the transformer we got through "divine benevolence", scale it up a bit, and add stupid hacks to it until it works.
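A minimal sketch of the kind of wrapper loop described above, assuming a hypothetical `model` function that maps a prompt to a reply (standing in for a call to GPT) and a hypothetical `send` callback standing in for the Gmail client. This is not AutoGPT's actual code or API; it only illustrates the shape of "ask for a plan, feed the plan back, act when a trigger word appears".

```python
from typing import Callable, List

SEND_TOKEN = "[SEND]"  # hypothetical trigger word, as in the Gmail example


def run_agent(
    model: Callable[[str], str],
    goal: str,
    send: Callable[[str], None],
    max_steps: int = 3,
) -> List[str]:
    """Prompt a text model for a plan, then prompt it to carry out each step.

    Whenever a reply contains SEND_TOKEN, the text before the token is passed
    to `send`, which is the only way this "agent" touches the outside world.
    """
    transcript: List[str] = []
    reply = model(f"Make a list of ways to {goal}")
    transcript.append(reply)
    for step in range(1, max_steps + 1):
        reply = model(f"Okay, how would you do Step {step} on your list? Now try doing that thing.")
        transcript.append(reply)
        if SEND_TOKEN in reply:
            send(reply.split(SEND_TOKEN, 1)[0].strip())
    return transcript


# Hypothetical stand-in for a real language model, so the sketch runs offline.
def fake_model(prompt: str) -> str:
    if prompt.startswith("Make a list"):
        return "1. Email a potential customer"
    return "Dear customer, want to buy something? [SEND]"


outbox: List[str] = []
log = run_agent(fake_model, "create a successful business", outbox.append, max_steps=2)
print(len(log), len(outbox))
```

Everything interesting lives inside `model`; the wrapper itself is just string plumbing, which is why this kind of agent is "hacked-together" and "super-dumb".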

The other possibility is that we don't have to do any of this, because the basic AI training process - where you stick data in one end, something you want in the other end, and do gradient descent - will automatically produce all these structures and functions for us if we get the input and output channels right and have a big enough parameter count. We didn't design image AIs by studying the occipital lobe, or LLMs by studying Broca's area. If we can figure out how to do some task-based form of AI training, the relevant structures will automatically arise inside the AI. I don't know how to do this, because there isn't obviously a corpus with a petabyte of agentic tasks in the same way there's a corpus with a petabyte of text. Maybe something something simulations? DeepMind has some older work on this, although they've pivoted to LLMs like everyone else. I don't know, if I was any good at predicting this kind of stuff I'd be at OpenAI making $800,000 a year. I just think there are plenty of options.
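The "data in one end, something you want in the other end, gradient descent" recipe can be shown on the smallest possible model: a single weight `w` fit to points generated by y = 2x by minimizing mean squared error. This is a toy sketch (the function name `train`, the learning rate, and the step count are illustrative choices), nowhere near the scale or architecture of real AI training.

```python
from typing import List


def train(xs: List[float], ys: List[float], lr: float = 0.01, steps: int = 1000) -> float:
    """Fit y ~ w * x by plain gradient descent on mean squared error."""
    w = 0.0
    n = len(xs)
    for _ in range(steps):
        # d/dw of (1/n) * sum((w*x - y)^2) is (1/n) * sum(2 * (w*x - y) * x)
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        w -= lr * grad  # step downhill
    return w


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]  # generated by y = 2x
w = train(xs, ys)
print(round(w, 3))  # -> 2.0
```

Nobody tells the model that the answer is "multiply by two"; the structure is recovered from the input-output pairs alone, which is the point being made about larger models growing their own internal machinery.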

In terms of AIs going rogue, I don't have strong reason to think that they will; my concerns are more that this seems hard to predict and even a small chance would be bad. But you could tell two stories.

In one, AI really is very different from humans. Humans evolved their desires piecemeal, first by creating a lizard brain, then by creating a monkey brain that tweaks the lizard machinery, then by creating a human brain that tweaks the monkey machinery. We don't optimize for goals like reproduction directly (e.g., donating to sperm banks in order to reproduce) because you couldn't run that kind of algorithm on a lizard brain, and the human brain is built on top of the legacy hardware. So instead you get all of these instincts and desires, and sometimes you can weakly and inconsistently use Reason to interrogate them. But if you're training an AI on one of these agency tasks, you might have human-level cognition before you even start adding in agency, and then maybe you do get some novel motivational architecture that just optimizes the goal directly. I'm not sure about this - even gradient descent might start from the simplest thing it can get and build up - it just seems like a different process. Or maybe it's not gradient descent at all, but something else where the AI is just really different than you thought it would be - alien hardware beaten into a human form, like the shoggoth-smiley-face meme - and at some point the real architecture comes out and does something you're not expecting.

In the second story, AI works exactly like we think it does and is very human. I believe you when you say that you're a nice person who just wants to walk dogs. But the history of multiracial countries where one race has much more power than the other isn't consistently great. If we think of AI as a new race - a race much more different from humans than any human race is from another, such that all of the racist tropes about how Race A is always X but Race B is always Y are totally true - then maybe somewhere along the line that leads to conflict. If I were one of the Sentinel Islanders who have never contacted the rest of the world, and the Indian Navy came knocking on my door and said "Hello, we have nuclear bombs and a GDP of $3.7 trillion, we've decided to integrate our two societies", I wouldn't say for sure that this would end with every Sentinelese person dead. But I would think they would have reason to be nervous, especially if they like their current lifestyle or having some form of self-determination. I think it's plausible that over the course of just a few years, we get hundreds of millions of agentic AIs who are smarter than we are. If they want to keep writing our high school history essays for us, great. Otherwise - well, I prefer not to think about the otherwise; I'd rather just work on ensuring we live in the world where they want to keep helping us.

And this is mostly unrelated, but I just learned it and I think it's interesting - it's not clear that AI will think a million times faster than we do. Current AIs think at rates that are pretty close to humans, and future ones might invest processor gains into being more intelligent and continue thinking at near-human rate.

Sotonye: On having good priors

Your priors for thinking about this sort of thing stand head and shoulders above those of a huge number of experts I’ve seen over the years. It reminds me of a quote from Hanania in an interview he did with Harvard. He described how making sure you have good priors is more important than domain expertise for general problem-appraisal because, for the most part, it’s just not possible to read widely on every subject, and it’s also possible to read widely, acquire domain expertise, and still draw short inference straws with the wrong initial assumptions. Here’s the quote, I think you’ll like it:

“When it comes down to it, we’re all operating off a few priors. There’s a few priors that we all have that are really doing the heavy lifting. To the extent that we’re aware of that and we’re questioning those priors and thinking about whether they make sense, I think that has the most return to your time and mental energy.

So, for example, in economics, my prior is that markets are better than central planning. If I want to know about some new stimulus bill that comes up, I could go read the 1000 pages and try to track down every claim that every researcher makes, but that’s not a good use of my time. The good use of my time is figuring out why I have this prior that markets are better than central planning, seeing if it’s correct, and looking at the alternative evidence. If it is correct, I think I can have a pretty good view on the bullet points of the stimulus package, and then it’s going to be broader, and it’s going to help you think about other things, too.

. . . acknowledging priors and questioning them—and making sure you have the right ones—is an important thing.”

How do you make sure you have good priors for things like existential and personal risk, and other broad, important, daily thoughts like career choices and romantic partners?

Scott:

The best answer I've found to this question is the one in Eliezer Yudkowsky's Intuitive Explanation Of Bayes' Theorem, which I reproduce below:

I think the reason people are so cagey around this issue is that - well, in one sense it's easy to answer your question, and the answer is exactly the kind of things you talk about. You look at the lessons of history, at problems that have already been solved, and at the things that the people you trust believe - and you try to learn general cases. I have no secret method beyond these.

But in another sense, all of this is terrible. There's no clear distinction between a prior and a bias. I too have a prior that markets are better than central planning. Does this mean anything different from the accusation "you're biased in favor of markets"? I get my priors from historical lessons and the people I trust - but what lessons I draw from history, and which people I trust, are themselves the end result of biases. It's all a ratchet.

I think the solution is that each step of the ratchet can either improve or worsen the information:bias ratio. If each step gives you a little more real information, you end up with a lens that successfully sees its flaws and overcomes the original bias. If each step gives you a little more bias, you eventually end up with a trapped prior.

There's no royal road here, except to try your best to expose yourself to unusual sources of information, to read arguments for sides you don't like, to make predictions to keep yourself honest, and to be genuinely puzzled by awkward little flaws in your story. I also sometimes find it helpful to occasionally put a ridiculous amount of effort into some important question, such that you're trying as hard as you can not to rely on priors and just to get as much of the unfiltered evidence as possible. I think this can work better than spending the same amount of time to investigate ten questions shallowly.

Sotonye: On Scott’s writing process

That last bit you just said reminds me of something that just happened today. Are you familiar with the Doomberg Substack? I just interviewed him a few hours ago and mentioned you and your work in our conversation. He reminds me a bit of you, and something he said about his workflow as the editor in chief of Doomberg made me wonder about your own. He said that he writes every night, usually between 2 and 4 am, when his brain tends to fire on all cylinders. How has your workflow across research, your professional work as a psychiatrist, and your writing evolved over time? How do you decide what to write, when to write it, and muster the motivation to make your digital pen dance to the drum of your ideas?

Scott:

I'm afraid I haven't read Doomberg yet. I'll have to check out the interview when you publish it.

Judging from X/Twitter, from comment sections, and from personal conversations, the average person has no shortage of ideas they're excited to talk about. I'm the same, but I get nervous that a short comment wouldn't present my case clearly, so I end up having to expand it into a long essay. Usually I have some point I want to make, I fret a lot about how to say it, and by the time I actually write it down I can mostly copy the form it's settled into in my head, filling in the gaps as I go. When that doesn't happen - when I'm trying to write the sentences for the first time as I type, instead of putting on paper what I've already been thinking - then it goes slower and comes out less well. But sometimes it can't be helped.

It sounds like you're collecting anecdotes about the quirks of different writers in the hope of finding out something interesting about the writing process. The main quirk I can contribute is that I can only work in a completely perfect environment. A nice ergonomic desktop, a big block of time when I won't be interrupted, and zero noise - even street noise is enough to scramble my thought processes. If I didn't have a loud fan and a good white noise machine, I'd have to switch to some other career. I'm afraid that most of the times I act like a jerk and snap at people unfairly happen when they interrupt me in the middle of writing.

And yeah, night is pretty good for this. You'll notice I'm sending you this email around 4:30 AM. My wife and I are splitting childcare - she takes day, I take the night. So far it's going well. The kids wake and need something once every few hours. Otherwise it's nice and quiet. And I have a great excuse for turning down all my social invitations.

Sotonye: On Scott’s newborns

I don’t have the awesome duties of having children yet even though I really want to, but I also end up writing very late every day. I’m not sure how anyone who writes writes without a world of perfect order, which is what I get here in Los Angeles when everyone besides the creepy barn owl in my neighborhood is motionless in sleep. I miss covid sometimes because of the huge blanket of quiet draped across these roads and skies!

But I really want to know more about your brand new life as a dad. What books, tools, strategies, are you employing toward life with newborns? I’m almost 29 and I’m hoping to start my own family soon, but I’m not sure why I fear the newborn stage so much. It’s shocking to me that there’s no instruction manual inside the placenta.

Scott:

I don't know how much useful advice I have to give. My twins are two months old. All I can say is that I've successfully kept them alive so far. And even there most of the credit goes to my wife, who has helpfully explained how all my innovative parenting ideas "are insane" or "would obviously kill the kids".

I really don't know. Right now it's all a blur. Still, they're good babies.

There's a strain of pronatalist argument that goes that people should be less worried about having kids, less stressed over picking the perfect partner, less neurotic about waiting until they've achieved exactly the right amount of career success. I understand it's well intentioned. But I did the opposite - waited until everything was perfect before having kids - and I can't imagine trying to raise them with fewer resources than I have right now. My wife is the perfect partner, and she's willing to be a stay-at-home mom at least for the first few years, and I work from home and set my own hours, and we make good money, and we have amazing support from family and friends and neighbors - and there still are nowhere near enough hours in the day. I read Bryan Caplan, so I know you just have to do the bare minimum to keep kids alive and happy, and you don't need to fret about the Baby Mozart Einstein Lessons stuff. But it turns out that the bare minimum to keep babies alive and happy is a lot! God only knows how the actual tiger mothers manage!

Fine, actual useful advice. If you have twins, force them onto the same schedule for feeding/changing/etc, otherwise you'll never get a moment off (this is harder than it sounds; they look so peaceful when they're asleep). Just as you should assume all guns are loaded, you should assume all babies are about to vomit on you. Get very good at swaddling. There is a Baby Maslow's Hierarchy Of Needs with nursing / burping / changing at the bottom, rocking in the middle, and if anyone ever figures out what's at the top they should let me know. You will need more burp rags and bibs than you think. No, even more than that. The Uppababy modular carseat/stroller system is expensive but very good; it removes one tiny source of friction from your life, and oh God will you be grateful for slightly fewer tiny sources of friction.

I don't regret anything. But I don't have much coherent advice. Ask me again in five years.

Sotonye: On depression and modernization

I’ve wanted to ask you for a while about the rising rates of depression in advanced countries and some possible causes. It’s a really interesting problem and there are a few theories I really like about why it happens, like the predictive processing model you’ve written about. Maybe modern environments expose us to something that throws off our top-down higher order confidence in predictions about bottom-up sense perceptions, sort of in the way that the shift to agriculture 10,000 years ago exposed us to something that caused the rise of homosexuality. Maybe modern environments have an unusual chemical or social factor that makes us worse off.

Maybe modernization and the rise of the mental health profession primes people with unusually strong higher-order predictive processing to overrate the importance of mental disorders in their own self-assessments, like when anorexia becomes more common in places it never was after the concept is introduced.

Or maybe it all has very little to do with modernization at all, which is what I was thinking the other day after reading about the effect of religious participation on depression and suicide. The literature reviews I read found no relation between religious involvement and major depression or suicidal ideation, but they did find that involvement was protective against suicide attempts. Which makes it sound like modern life erasing “third-places” and communities doesn’t really drive despair, but that low-level depression and suicidality might actually just be normal in human beings. What are your thoughts these days about why we’re increasingly depressed? What can we do for the depressed people in our lives?

Scott:

These kinds of questions are tough because we're not good at measuring the true underlying rates of mental illness. Depression diagnoses have gone up, but this could be because people are more likely to go to the doctor for mild cases. I got a strong sense of this when I did my training in Ireland, which at the time was about 25 years "behind" the US culturally. People there thought of depression as something that would probably land you in the psychiatric hospital - an extreme, life-ruining condition that made you totally different from normal people. As time goes on, opinions change, and pharma companies produce better commercials, we shift our diagnostic line closer and closer to ordinary unhappiness. We've seen something similar with autism.

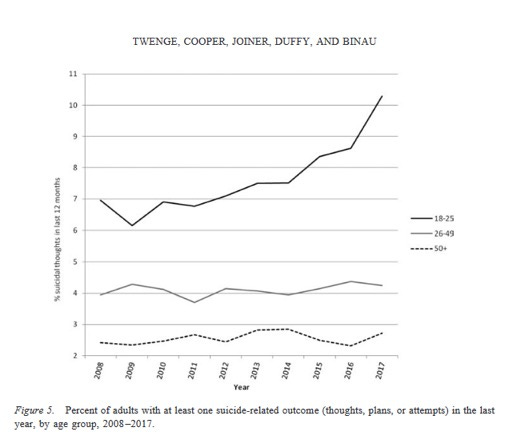

So have underlying depressive symptoms really gone up? Studies are, of course, mixed - you can find an overview in the paragraph beginning with "the increased availability" at https://www.sciencedirect.com/science/article/pii/S0272735821001549#bb0525 . Without giving a full justification, I'm going to say I mostly believe some of the findings in https://www.apa.org/pubs/journals/releases/abn-abn0000410.pdf, which show that:

The two things I notice here are that increasing depression is almost entirely concentrated among young people, and that it's going up really fast. I don't think this supports theories around modernization, social decay, changing attitudes to mental health, etc, which have all been happening a long time and affect everyone equally. The only answer that really fits is social media. Studies are really mixed about this - see https://inews.co.uk/news/technology/dont-panic-about-social-media-harming-your-childs-mental-health-the-evidence-is-weak-2230571 - but I can't think of what else has been changing that quickly. Probably there are ideas about identity politics and global warming apocalyptism and fetishization of mental health issues that are contributing, but I don't know why those would all be coming up now and hitting young people so hard all of a sudden if not for the Internet.

Sotonye: Final question

I’d like to know more about who Scott Alexander is; I feel no one has really asked. There are huge gurgling waves of assumptions about you crashing and swirling around online, and they’ve washed out anything reasonable about the question. But I really want to know. Before typing out this question I spent some time reading your novel “Unsong” to get a better image of things, and on reading it I felt maybe you’re just like me. There seems to be a deep spiritual passion, and an interest in Jewish mysticism and philosophy. It reminded me a lot of myself. I wasn’t born Jewish, but I converted to Judaism a long time ago after trying every religious and mystical system you could name. At one point I was probably the best meditator for my age in North America. I poked and prodded reality where I could, and things got paranormal and scary, but I was really looking for something more; I had to. I feel that you’re the same way. I want to know about this side of Scott Alexander because I think it’s a very deep part, and if you can, I’d also just like to know more about your personal thoughts and habits around health, diet, and maybe a little about your interest in writing fiction. Tell me about being Scott Alexander.

Scott:

You're Karaite, aren't you? I've been thinking about them a lot recently. I'd like to be more observant, but the prospect of following Jewish law in its entirety feels overwhelming, and picking and choosing feels too unprincipled. I was hoping there was some option to start with Torah law and worry about the rabbinical prohibitions later, but apparently that's "heretical", plus the Karaites have complex traditions of their own and aren't just Judaism lite. I'm not sure; good work figuring out something that works for you.

I went through a spiritual/paranormal phase in college, but was never able to get anywhere. At some point I talked to a friend who was doing ritual magick and seeing some really freaky stuff. I tried exactly what he tried and got nothing, probably because even when I bang on my brain really hard and shout "HALLUCINATE, GOSHDARNIT!" I just don't have enough trait psychoticism to make it work. I've experimented with meditation, Alexander technique, yoga, hypnosis, etc, and dropped out of all of them through some combination of too-low-willpower-to-stick-with-it-long-enough and too-low-openness-to-get-anything-from-it. Guess I'm doomed to be a perfectly normal person with no connection to the spiritual world, and I accept that. Luckily I feel like life is pretty good and meaningful even so.

(I also tried drugs briefly, but had the opposite problem: I have such extreme reactions to them that it's probably not a good idea to push that door too hard.)

I can't really speak to fiction. I'd love to write more, but it's like everyone says - you take whatever the Muse gives you and you're grateful for it. Totally different from nonfiction, where you can occasionally write things yourself even if the Muse isn't around that day. I have some projects, but I'm superstitious about revealing anything before it's done. If the Muse comes through for me, you'll see eventually.

As for who I am, I'm afraid I'm pretty boring. I'm introverted and will take any excuse not to leave my house (babies are great for this, by the way - "sorry, I'd love to hang out, but you know how hard it is to find childcare"). If I hadn't found a community I really vibe with - and gotten a popular blog as an alternative to having social skills - I'd probably be a total shut-in. I once proposed the gravity model of friendship: the amount of time I see someone decreases with the square of the distance between our houses. Luckily there's a big rationalist group house four houses down from me, full of some of my oldest and dearest friends. They're always doing things and having people over, so I use them as a prosthetic add-on social life.

Otherwise I can't stress enough how boring I am. I wake up at 6 PM every evening, have an impossible meat burrito at about 10, cereal at 5, then go to sleep at 8 AM. If it's a workday, I wake around noon to videoconference with a few patients, then go back to sleep. I bake a loaf of bread every Friday. I'm technically poly, but never get around to arranging dates with my other partners, except for one who comes over at 7:30 PM every Monday night to help with the babies. Aside from that, anyone who reads my blog already knows everything I spend my time doing and thinking about.

Here's a new parent bias: you imagine that your children are more normal than they are.

For example, with neither of my children did I need much in the way of burping rags. There are all kinds of things that you imagine are universal experiences but that turn out to be idiosyncratic to your children.

My cousin had a child about the same time as my first child. When our children were both about two, he asked me, "How do you deal with the biting? Any tips?" And my answer was, "Uh, my daughter doesn't bite people." Unspoken -- BECAUSE I'M A GOOD PARENT.

Three years later, when my second child was two: OH, THAT'S WHAT HE MEANT. All the stuff we thought we had figured out and nailed down with child 1 turned out not to be our doing; she was just predisposed to be easy in that way.

"I wake up at 6 PM every evening, have an impossible meat burrito at about 10, cereal at 5, then go to sleep at 8 AM."

So, Scott, you're totally nocturnal!? Is this related to the twins, or do you just like it that way?